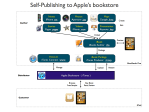

Over the last couple of weeks I finished writing the book about my adventure of a lifetime: Panamerican Peaks, cycling from Alaska to Patagonia and climbing the highest mountain of every country along the way. By now I have successfully self-published the book to the Apple bookstore. This post gives a recap of the steps involved in that process, with a focus on the tools, logistics and finally some numbers and sales stats.

Disclaimer: In my personal life I am an avid Apple fan, and this post is heavily biased towards Apple products. In particular, the eBook is only available for the iPad. So the tools and publishing route described below may not be for everybody, but the process and lessons learnt may still be of interest.

Creating Content

The first step is obviously to create, select and edit the content of the book. During the actual trip I tried documenting my experiences via the following:

- Taking about 10,000 photos with digital cameras (Olympus and Panasonic)

- Taking daily notes with riding or climbing stats (on iPhone or NetBook)

- Shooting about 200 video clips (Flip Mino)

- Uploading photos (to Picasa) and videos (to YouTube)

- Writing posts on my personal Blog

In the months after coming home I refined some of the above material. Using iMovie I created movies of about five minutes each, based on video clips, photos and map animations, typically with some iTunes song in the background and a bit of explanatory text or commentary. I shared those videos on my personal Blog and on my Panamerican Peaks YouTube channel.

I loaded all photos into Aperture on our iMac and tagged and rated them. That allowed me to organize them by topic or as required. The ‘Smart Folders’ feature of Aperture comes in handy here, as it lets you set up filters and select a subset of photos without having to copy them. For example, if I wanted photos rated 4 stars or higher related to camping, or photos of mountains in Central America, I just needed to create another Smart Folder. This was very useful, for example, for the Panamerican Peaks Synopsis video, which features quick photo sets by topic (cycling, climbing, camping, etc.).

Google Earth proved to be a very useful tool as I could easily create maps of the trip based on the recorded GPS coordinates from my SPOT tracker. One can even retrace the trip in often astonishing detail thanks to Google Street View. For example, in many places along the Pacific Coast I can look at campgrounds or road-side restaurants where I stopped during my journey. I even created a video illustrating the climbing route on Mount Logan from within Google Earth.

The heart and soul of any book is of course the story and the text used to tell it. I created multiple chapters using MS Word because I am so used to it, but one can of course use any modern text writing tool. In addition, I created some slides for presentations I gave last summer using Keynote.

Book Layout

Once all the ingredients were available, it was time to compose the actual book. As I had decided to build an eBook for the iPad I used Apple’s new iBooks Author tool on my MacBook Pro. This meant choosing the layout and including the text and media. iBooks Author provides a few interactive widgets and accepts all widgets that can be installed into the OS X Dashboard. This in particular allowed me to link to the various YouTube videos. At any time I could push a preview of the book to my attached iPad 2.

After many weeks of busy work putting the finishing touches on the book and adding various edits from a few trusted friends I got to the point where I needed to figure out how to get the book published in Apple’s bookstore. There are two steps required here:

- Creating a developer account with Apple via iTunes Connect

- Managing one’s content via iTunes Producer

The creation of the account is fairly straightforward through the web browser. To get started, I visited Apple’s Content Provider FAQ page and filled out an application. One submits basic information such as name, address, tax ID and credit card information, and ties it all to an existing Apple account. It can take a while. I never received the account validation email I was promised. So after a few days I started inquiring in Apple’s support forum, where I learned that this had happened to others as well. Finally I just tried connecting via web browser to itunesconnect.apple.com and it worked – I had an account to publish from.

The packaging of all material and uploading is done via the free iTunes Producer app on the Mac. iBooks Author exports the book in .ibooks format, which becomes part of the iTunes Producer package. One can also provide a free sample for the book. This can be any subset or variation of the full book, unlike with Amazon’s bookstore, where the free sample is always the first N pages.

Next, one needs to provide additional metadata such as book category, description, author name, optional sample screen shots etc. One also has to provide an ISBN (International Standard Book Number) for the book. These can be obtained from publishers or purchased directly from Bowker. The ISBN requirement stems from the need to catalogue and identify physical books in inventory or libraries, but seems a bit anachronistic for electronic books. The prices for ISBNs are very high, especially for small volumes (1 for $125, 10 for $250, 100 for $500, 1000 for $1000). But since Bowker has a monopoly in the US you don’t have a choice in that matter. This expense seemed to be the only marginal upfront cost of publishing the book (aside from the tools to create the content).
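To see how steeply the per-ISBN price falls with volume, here is a small sketch that divides each bundle price quoted above by its quantity. The tier figures come from this post; the names `ISBN_TIERS` and `unit_price` are just illustrative.

```python
# Bowker ISBN price tiers as quoted in this post: (quantity, total price in USD)
ISBN_TIERS = [(1, 125), (10, 250), (100, 500), (1000, 1000)]


def unit_price(quantity, total):
    """Return the price per ISBN for a bundle of the given size."""
    return total / quantity


for quantity, total in ISBN_TIERS:
    print(f"{quantity:>4} ISBNs: ${total:>4} total, ${unit_price(quantity, total):.2f} each")
```

A single ISBN costs 125 times as much per number as one bought in the 1000 bundle, which is why the pricing hits a one-book author hardest.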

Finally one can determine the pricing and the markets where the content is to be sold. Apple follows the agency model of book publishing: As author you get to set the price. As the distributor, Apple takes a share of the proceeds, here 30%. (By contrast, in the wholesale model you sell to the distributor at a discount, say 50% of the suggested retail price; the distributor then has sole discretion to set the selling price.)
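The difference between the two models can be sketched per copy sold. This is only an illustration: the 30% share is Apple's figure from above, the 50% wholesale discount is the "say 50%" example rather than a real contract term, and the function names are made up for this sketch.

```python
def agency_proceeds(list_price, distributor_share=0.30):
    """Agency model: the author sets the price and the distributor keeps a share."""
    return list_price * (1 - distributor_share)


def wholesale_proceeds(suggested_retail_price, discount=0.50):
    """Wholesale model: the author sells to the distributor at a discount.

    The 50% default is the illustrative figure used in the text, not a quoted rate.
    """
    return suggested_retail_price * (1 - discount)


price = 9.99  # the list price of the book in USD
print(f"Agency model:    ${agency_proceeds(price):.3f} per copy")
print(f"Wholesale model: ${wholesale_proceeds(price):.3f} per copy")
```

Under these assumptions the agency model pays the author more per copy, and the author also keeps control of the price the reader sees.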

Book Review

Much has been written about the very restrictive terms and conditions Apple puts on authors using their iBooks Author tool. Essentially it locks you in as an author to sell only through Apple. For many authors that is not a viable option. It also allows Apple to reject your work at their sole discretion. So as an author you are completely at the mercy of Apple’s review process.

Apple is also strict about enforcing certain rules regarding the content it allows you to sell. For example, your book cannot contain any links to YouTube videos or Amazon books. They rejected my first revision with YouTube links and suggested embedding all videos. This would have bloated the download size of the book by more than 1 GB. As a compromise, I created short 1 min teaser versions of all videos and included those. At the end they display a screen pointing to the companion website (my personal Blog) for the full versions.

After three revision cycles and about a week I finally had my book on sale in 24 countries around the world, for $9.99 or the equivalent in Euro or other countries’ currencies.

Book Marketing

Publishing is not selling. Here are some of the things I did to promote my own book:

- Email – Customized note to Hotmail contacts (~ 300 contacts)

- Twitter – Tweets and direct messages to influencers for retweets (~ 2000 followers)

- Facebook – My daughter posted on her wall (1000+ friends)

Sending the emails was not without hiccups. I used MS Word and Outlook to do a mail merge with text blocks and individual text from an Excel spreadsheet. First, the Mail Merge Filter condition dialog has a bug which replicates the last AND condition and adds it as an OR condition. This screws up your filter and ends up selecting lots of folks you didn’t mean to. I found this bug during a test with the first 5 addresses. (I sent them each an apologetic email explaining this.) Once I did all the filtering in the spreadsheet instead, it worked and Outlook cranked out the emails. After a short while, Hotmail decided that my account had apparently been hacked and used for spam, so they locked my account down! In a way this is good, but I didn’t consider my carefully crafted and personalized emails spam. So I had to change my password and unlock my account again.

The email was very effective. I got lots of positive responses and a few folks decided to buy right away. I had sold my first copy. Every journey of 1000 miles starts with a single step.

As a result of my daughter posting the news on Facebook I noticed a spike (4x average) in the views of my Blog and Book page. I also offered promo codes for a free book download to influential Twitter users if they would retweet the book announcement to their followers. Within a couple of days a handful of them accepted the offer and retweeted, which exposed the tweet to a total of 2,000+ followers.

I had emailed the Apple bookstore, and to my delight they actually featured my book in their Travel & Adventure category.

Book Sales

With all these promotion efforts I couldn’t wait until the next morning to see the sales numbers. (iTunes Connect updates its sales numbers only once a day.) I had the first ratings and reviews come in, all at 5 stars. Naturally, I hoped to see the sales numbers go up. After all, I had reached hundreds, if not thousands of people, most of whom either know me or are somewhat interested in adventure. The result? Tiny sales numbers. To date, after one week, I have sold 14 copies, with a maximum of four (4) copies per day. At my $10 price and 70% share this amounts to just under $100 for the first week. Not exactly enough to retire on.
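For the record, the back-of-the-envelope calculation behind that figure looks like this. It is a rough sketch that assumes every copy sold at the US list price of $9.99; actual proceeds vary with currency and market, and the variable names are only illustrative.

```python
COPIES_SOLD = 14        # copies sold in the first week
LIST_PRICE_USD = 9.99   # assumption: every copy sold at the US list price
AUTHOR_SHARE = 0.70     # author keeps 70% under Apple's agency model


def author_revenue(copies, price, share):
    """Estimate author proceeds for a number of copies sold."""
    return copies * price * share


first_week = author_revenue(COPIES_SOLD, LIST_PRICE_USD, AUTHOR_SHARE)
print(f"First-week author revenue: ${first_week:.2f}")
```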

I’ll revisit this topic at some point in the future when I have more data. Obviously, the iPad is just a fraction of the entire book market with Kindle, Nook and other devices. (Although the iBook looks much better on the iPad than on many other readers, in particular the smaller black & white e-Ink display Kindle readers.) While the selection of titles seems comparable on Apple’s and Amazon’s bookstores, about 1.35 million each (see a spreadsheet of my recent sample here), there don’t appear to be many shoppers in Apple’s bookstore. Of course, Travel & Adventure is only a small fraction of the book market. But even there, on a day where I sold two copies my book briefly ranked 30th in the Top Charts. 30th out of 11,800 titles (in Travel & Adventure)! That means the other 11,770 titles sold even fewer copies than mine (i.e. one or none) during the sampling time interval. Book sales appear very unevenly distributed, another case of huge online inequality.

But more importantly, most of the people reached by my promotional efforts don’t engage to the level of actually following the links, downloading the sample and finally buying the book. From my experience, one needs to reach more than 100 people for every book sold. Fellow adventure traveller and author Andrew Hyde – whose book coincidentally is featured just above mine in the screen-shot above – has recently written about his book sales here. His stats show a similarly small fraction of sales to views. I just don’t have the millions of Twitter followers to generate meaningful sales this way!